TECHNICAL CONSIDERATIONS

The third step is deciding on the technical aspects of your M&E plan. Several factors should be settled at the outset because they have financial and human resource implications. This section addresses the following issues:

- Timing: project cycle and seasonality

- Resources: human and financial

- Strength of evidence: level of causal attribution

Ideally, an M&E plan is developed before the design of SBCC interventions and program activities begins; however, if your program has already begun, you can still develop an M&E plan. Keep the following points in mind:

- Malaria Indicator Surveys (MIS) are fielded during the rainy season in countries with seasonal transmission. Demographic and Health Surveys (DHS) and Multiple Indicator Cluster Surveys (MICS) are fielded in the dry season. These surveys may not cover the specific areas where SBCC interventions took place, and should not be used as stand-alone measures of success. If they are used, surveys fielded in different seasons should not be compared, particularly when looking at case management behaviors. For example, care-seeking for fever may be delayed in dry seasons, and this delay will be reflected in DHS and/or MICS data. Comparing DHS and/or MICS data with MIS data might therefore give a false impression that prompt care-seeking has increased.

- Prompt care-seeking for fever, RDT use, and provider adherence to diagnosis and treatment protocols might also vary by season. For this reason, baseline and endline surveys should be planned for the same season. Additionally, pre-post survey designs do not capture important contextual factors, such as previously existing upward or downward trends in behavior, and cannot adjust for variations in commodity availability (stock-outs) or annual rainfall. Study designs that employ two baselines, or that track different clusters at multiple points over time, will more accurately describe such underlying trends and changes in behavior between seasons.
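The seasonality rule above can be turned into a simple check that is run before any two surveys are compared. The sketch below is illustrative only: the `Survey` class, the `RAINY_MONTHS` set, and the survey dates are hypothetical and should be replaced with the fieldwork dates and transmission calendar of the country in question.

```python
from dataclasses import dataclass

# Hypothetical rainy-season months for an illustrative country.
# Replace with the actual transmission calendar before use.
RAINY_MONTHS = {6, 7, 8, 9, 10}


@dataclass(frozen=True)
class Survey:
    name: str
    year: int
    month: int  # month in which fieldwork was centered (1-12)


def season(survey, rainy_months=RAINY_MONTHS):
    """Label a survey 'rainy' or 'dry' from its fieldwork month."""
    return "rainy" if survey.month in rainy_months else "dry"


def comparable(a, b, rainy_months=RAINY_MONTHS):
    """Treat two surveys as comparable only if fielded in the same season."""
    return season(a, rainy_months) == season(b, rainy_months)


# Hypothetical surveys, for illustration only.
baseline = Survey("baseline", 2015, 8)
endline = Survey("endline", 2017, 2)
mis = Survey("MIS", 2017, 9)

for first, second in [(baseline, endline), (baseline, mis)]:
    verdict = "comparable" if comparable(first, second) else "not comparable"
    print(f"{first.name} ({season(first)}) vs {second.name} ({season(second)}): {verdict}")
```

A check like this only flags a seasonal mismatch; it does not account for stock-outs, unusual rainfall, or pre-existing trends, which still need to be considered separately.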

Many donors recommend dedicating at least 10% of a project's budget to M&E activities.

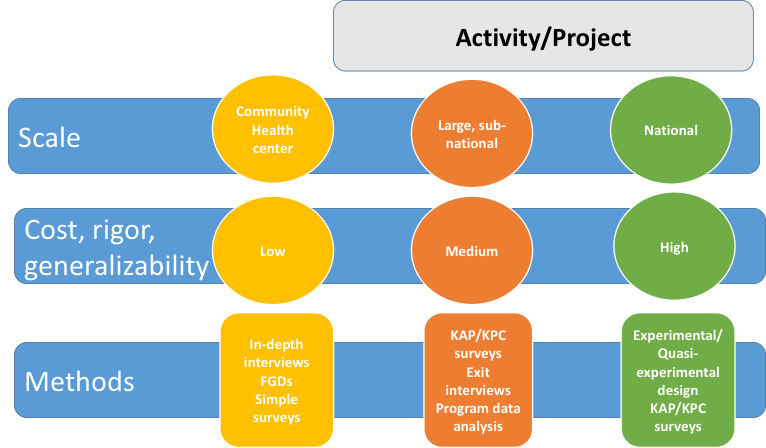

Costs will vary depending on the rigor of the evaluation, the population size, and the SBCC intervention. In general, population-based household surveys are more expensive than smaller surveys or secondary data analysis. Surveying a small number of service providers (when examining adherence to RDTs, for example) will be far less expensive than determining the proportion of caregivers who sought a test for fever. Determining the proportion of febrile children tested with an RDT will be quite costly, because a very large number of respondents must be surveyed to detect a statistically significant change in this outcome. Most of the costs associated with surveys come from staff time, per diem, lodging, travel expenses, fuel, and associated administrative costs, including training and materials. Consider the following when estimating costs:

Examples of Program Functions and Associated Costs
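To give a rough sense of why outcomes such as RDT testing among febrile children require large surveys, the sketch below applies the standard sample-size formula for comparing two proportions and then converts the result into the number of households that would have to be visited. All input values (the proportions, the design effect, and the share of households with a recently febrile child) are hypothetical assumptions chosen for illustration, not figures from this I-Kit.

```python
import math
from statistics import NormalDist


def n_per_group(p1, p2, alpha=0.05, power=0.80, design_effect=1.0):
    """Respondents needed per survey round to detect a change from p1 to p2.

    Uses the standard normal-approximation formula for two independent
    proportions, inflated by a design effect for cluster sampling.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2
    return math.ceil(n * design_effect)


def households_needed(n_eligible, eligible_share):
    """Households to visit when only a share of them contain an eligible respondent."""
    return math.ceil(n_eligible / eligible_share)


# Hypothetical inputs, for illustration only.
children = n_per_group(p1=0.30, p2=0.40, design_effect=2.0)
households = households_needed(children, eligible_share=0.125)

print(f"Febrile children needed per round: {children}")
print(f"Households to visit per round:     {households}")
```

Because only a fraction of households will have a child with recent fever, the number of households visited can be many times the number of children actually analyzed, which is what drives the cost.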

When asked to prove that an SBCC intervention or program caused changes in behavior, it is necessary to develop a rigorous evaluation design. In SBCC, strong study designs compare exposed and unexposed groups of people in an attempt to demonstrate a cause-and-effect relationship (causal attribution). While measuring exposure and changes in behavior is common, determining how directly SBCC activities were responsible for a change requires a greater level of preparation. For this reason, study methodology alone is not an indication of strong evidence. For example, while randomized controlled trials are a respected study methodology in general, this design is inappropriate for community-level SBCC interventions when it is important to know how an intervention works. On the other hand, randomized controlled trials are often and appropriately employed when SMS messages are used as part of service provider SBCC case management interventions. The pros and cons of a number of study designs are described in the next section of this I-Kit. Keep the following considerations in mind when determining the level of causal attribution you wish to demonstrate:

- A greater frequency of surveys will more accurately describe the effects of an SBCC intervention.

- Randomization, the sampling approach, and appropriately calculated sample sizes help ensure that results are representative.

- When comparing exposed with unexposed groups, the greater the similarity between the groups, the less likely it is that observed changes are due to non-intervention factors (wealth, gender, etc.).

- Methods of controlling for non-intervention factors, such as difference-in-differences or propensity score matching techniques, reduce the risk that changes are due to non-intervention factors.
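As a minimal sketch of the difference-in-differences idea mentioned above, the example below subtracts the change seen in an unexposed group from the change seen in an exposed group. The function name and the four proportions are hypothetical and chosen only for illustration; a real analysis would normally use a regression model with standard errors, and it rests on the assumption that both groups would have followed parallel trends without the intervention.

```python
def difference_in_differences(exposed_pre, exposed_post,
                              unexposed_pre, unexposed_post):
    """Change in the exposed group minus change in the unexposed group."""
    change_exposed = exposed_post - exposed_pre
    change_unexposed = unexposed_post - unexposed_pre
    return change_exposed - change_unexposed


# Hypothetical proportions of caregivers seeking prompt care for fever.
estimate = difference_in_differences(
    exposed_pre=0.40, exposed_post=0.60,
    unexposed_pre=0.42, unexposed_post=0.50,
)

print(f"Naive pre-post change in exposed group: {0.60 - 0.40:.2f}")
print(f"Difference-in-differences estimate:     {estimate:.2f}")
```

In this illustration the unexposed group also improved, so part of the pre-post change in the exposed group reflects a background trend rather than the intervention; the difference-in-differences estimate removes that shared trend.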